PySpark Tutorial

Introduction to PySpark

PySpark is the Python interface to Apache Spark, an open-source framework used for large-scale data processing and machine learning.

Apache Spark itself is written in Scala and runs on the JVM, and it offers APIs for Scala, Java, Python and R. PySpark's DataFrame resembles a pandas DataFrame and supports a similar range of operations, but its data is distributed across a cluster.

PySpark is well suited to data processing and machine learning at scale, and it can read and analyse CSV files with very little code.

When reading a CSV file, the reader should recognise the header values. By default it does not, and columns receive generic names such as _c0; setting the header option to true makes the first row's values the column names.

After reading a data set, users can inspect its schema, which lists every column with its type, for example string. The data is held in a pyspark.sql DataFrame, which resembles a pandas DataFrame but is a different type with different properties.

Users can then create a small test data set of their own, with columns such as name, age and experience, for comparison purposes.
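The CSV-reading steps above can be sketched as follows. This is a minimal sketch, assuming an existing SparkSession is passed in as `spark`; the function names, the file path and the sample rows are hypothetical and not taken from the original tutorial. `header=True` takes column names from the first row, and `inferSchema=True` asks Spark to guess column types instead of reading every column as a string.

```python
from typing import Any


def read_csv_with_header(spark: Any, path: str):
    """Load a CSV file into a pyspark.sql DataFrame.

    `spark` is assumed to be an existing SparkSession.
    """
    # header=True: use the first row as column names.
    # inferSchema=True: let Spark infer column types from the data.
    return spark.read.csv(path, header=True, inferSchema=True)


def make_test_frame(spark: Any):
    """Build a tiny test DataFrame; the rows are made-up sample values."""
    rows = [("Asha", 31, 10), ("Ben", 30, 8), ("Chen", 29, 4)]
    return spark.createDataFrame(rows, ["name", "age", "experience"])
```

With a live session, calling `printSchema()` on the returned DataFrame prints each column together with its type.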

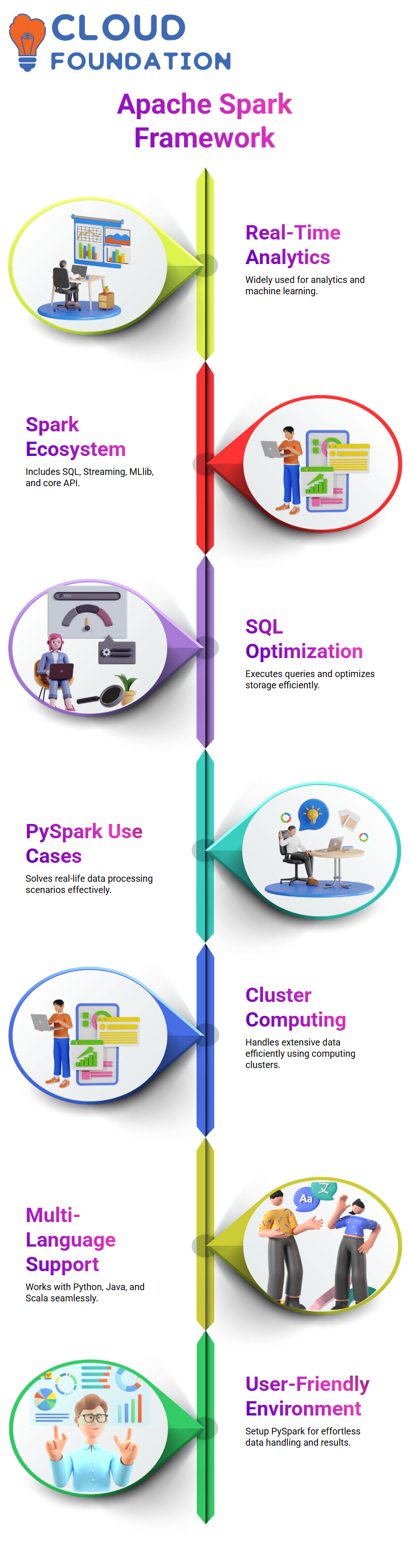

Apache Spark Overview

Apache Spark is a fast, general-purpose framework used for real-time analytics and machine learning across many industries, including real estate.

The Spark ecosystem includes several components: Spark SQL, Spark Streaming, MLlib, GraphX and the core API, to name a few.

The Spark SQL component builds on declarative queries and optimised storage: it runs SQL-like queries over Spark data, whether that data is held in RDDs or comes from external sources.

PySpark can be used to address real-life use cases with Spark, working on data held in RDDs or drawn from external sources.
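A declarative query of this kind can be sketched as below. This is an illustrative sketch only: it assumes `spark` is an existing SparkSession and `df` is a DataFrame with `name` and `age` columns, and the view name `people` and the function name are hypothetical.

```python
def people_older_than(spark, df, min_age):
    """Run a SQL-like query over `df` through a temporary view."""
    # Register the DataFrame under a (hypothetical) view name so SQL can see it.
    df.createOrReplaceTempView("people")
    # int() keeps the interpolated value a plain number.
    query = f"SELECT name, age FROM people WHERE age > {int(min_age)}"
    # spark.sql returns a new DataFrame; nothing runs until an action is called.
    return spark.sql(query)
```

The same result could be expressed with DataFrame methods such as `filter` and `select`; the SQL form is shown here because it matches the declarative style described above.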

Apache Spark is a flexible framework widely used for real-time analytics and machine learning applications. The following sections describe its functionality and what is needed to install it on your system.

Apache Spark is a versatile and efficient cluster computing system that can handle large amounts of data and provides high-level APIs in Scala, Java, Python and R.

Creating a new Python environment and installing the PySpark library into it is enough to start working with Apache Spark from Python.

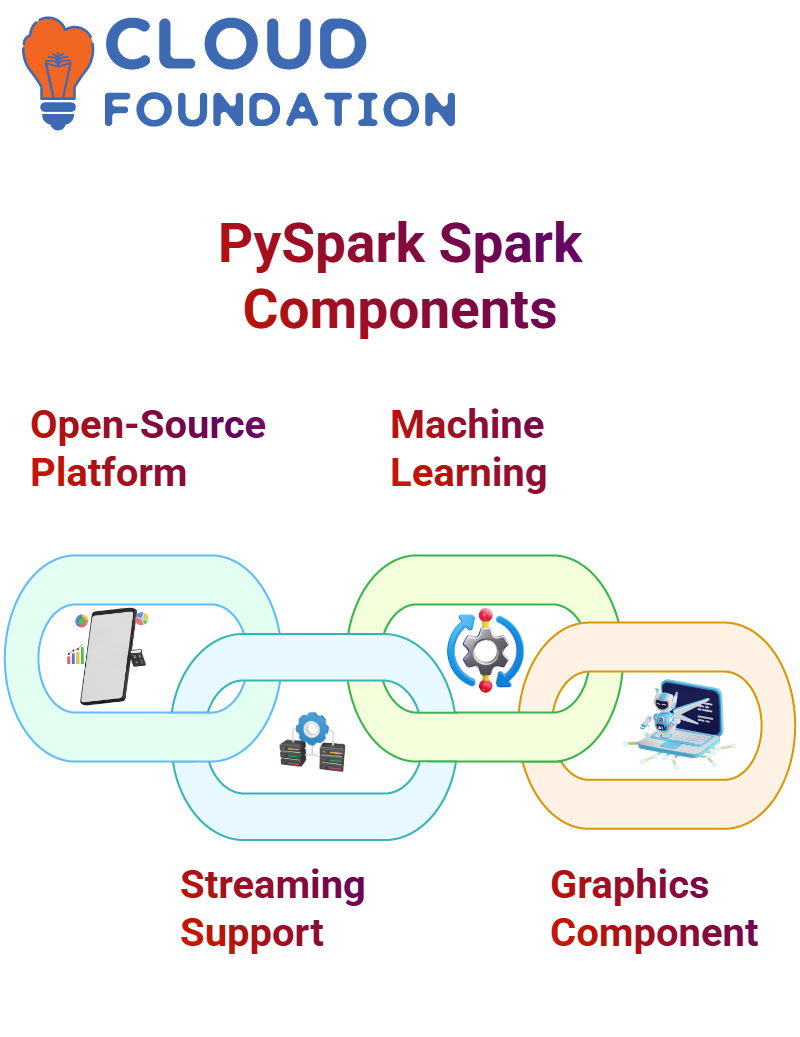

Spark Components in PySpark

PySpark brings Spark and Python together. Spark is an open-source cluster computing framework focused on speed, ease of use and streaming analytics, and it ships with libraries for machine learning and real-time applications.

Spark Streaming lets developers combine batch processing and stream processing within one application, while MLlib, the machine learning library, makes it easier to develop and deploy scalable machine learning pipelines.

The GraphX component lets users work with both graph and non-graph data sources, providing flexibility and resilience during graph construction.

Spark Core sits at the centre of the Spark ecosystem and is responsible for basic input/output operations, scheduling and monitoring. Its execution engine supports several languages, including Scala, Java, Python and R.

These language APIs give users access to Spark through a simple interface in whichever supported language they prefer.

PySpark shell

The PySpark shell offers an interactive way to create and experiment with Spark applications.

When configured to do so, launching it opens a Jupyter notebook, which makes interactive work simple. The shell works best as an aid for exploring data and working through issues, not as the main way of running finished applications.

This section covers how PySpark is set up and introduces several core Spark concepts.

At the heart of every Spark application lies its Spark context, which sets up internal services and establishes a connection to a Spark execution environment.

The Spark context lets the driver application access cluster resources through a resource manager. Once connected, the driver runs operations on the cluster, and PySpark uses Py4J to launch a JVM and create a JavaSparkContext.

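A SparkContext accepts parameters such as the Spark home directory (sparkHome), Python files to ship to the cluster (pyFiles), environment variables for the workers (environment), the batch size (batchSize), the serializer, a configuration object (conf) and the gateway.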
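Creating a Spark context can be sketched as below. This is a minimal sketch that assumes PySpark and a Java runtime are installed; the helper names `conf_pairs` and `create_context` and the default `local[*]` master are illustrative choices, not part of the original tutorial.

```python
def conf_pairs(app_name, master="local[*]"):
    """Return key/value settings for a Spark application."""
    return [("spark.app.name", app_name), ("spark.master", master)]


def create_context(app_name, master="local[*]"):
    """Create (or reuse) a SparkContext built from the settings above."""
    # Imported lazily so conf_pairs can be used without PySpark installed.
    from pyspark import SparkConf, SparkContext

    conf = SparkConf().setAll(conf_pairs(app_name, master))
    # getOrCreate avoids starting a second context in the same process.
    return SparkContext.getOrCreate(conf)
```

A call such as `create_context("demo")` would start a local context using all available cores; calling `stop()` on the returned context releases its resources.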

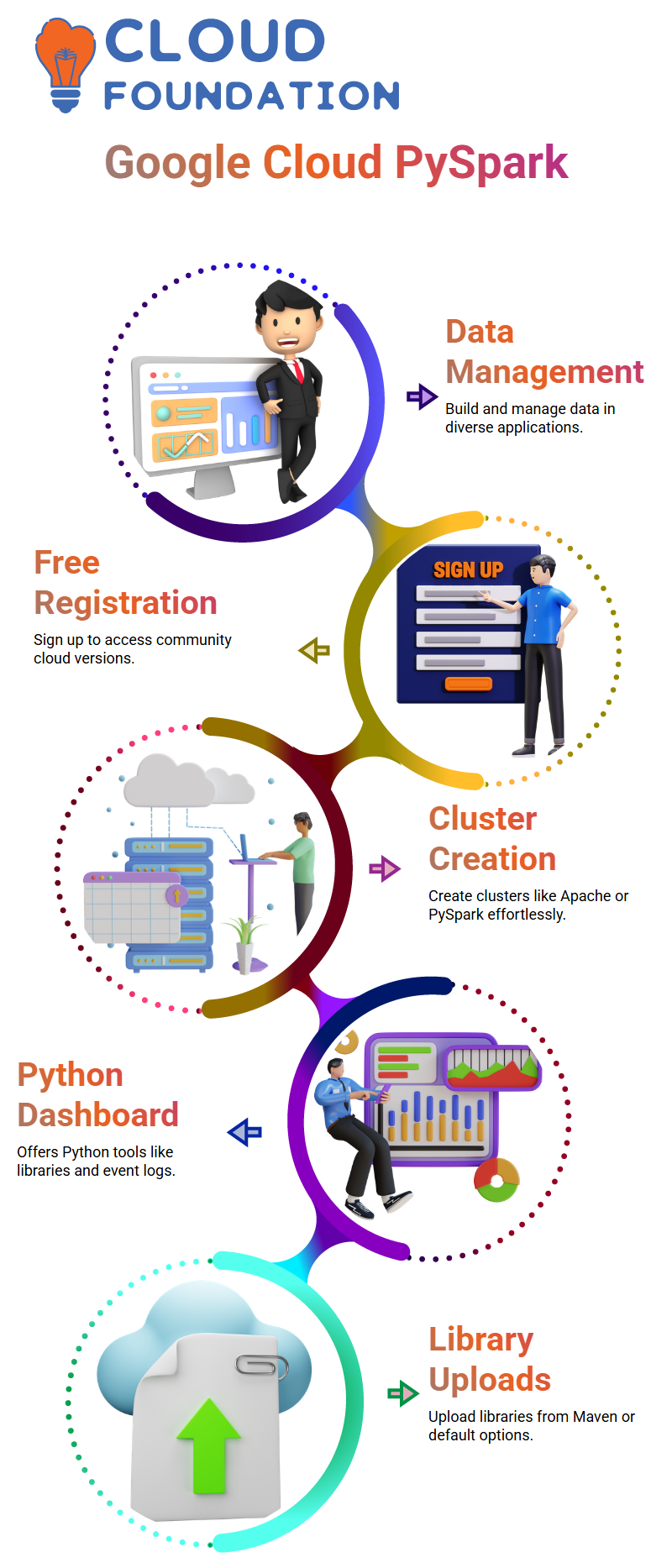

Google Cloud in PySpark

Google Cloud provides an effective means of creating and managing data across applications, with features including notebooks, tables, clusters and MLflow experiments.

Getting started with the community version is easy: users register by providing their details at the sign-up URL and selecting the cloud version they want.

Once registered, users choose which of the three available platforms they need and how long their free use should last.

The community version is designed for ease of use. Its landing page offers the Explore Quick Start tutorial, key data sets and blank notebook creation.

From there, users can create notebooks, tables, clusters and MLflow experiments, import libraries and read the documentation.

Users can create a cluster by clicking the “create a cluster” button and providing a name of their choice, such as Apache or PySpark cluster. The cluster dashboard then offers tabs for notebooks, libraries, event logs, the Spark UI and driver logs.

Users can install Python libraries from PyPI, or use Maven for libraries that run on Java. Packages such as TensorFlow or Keras can be installed explicitly when a workload needs them. By default, a cluster used for PySpark has no additional libraries installed.

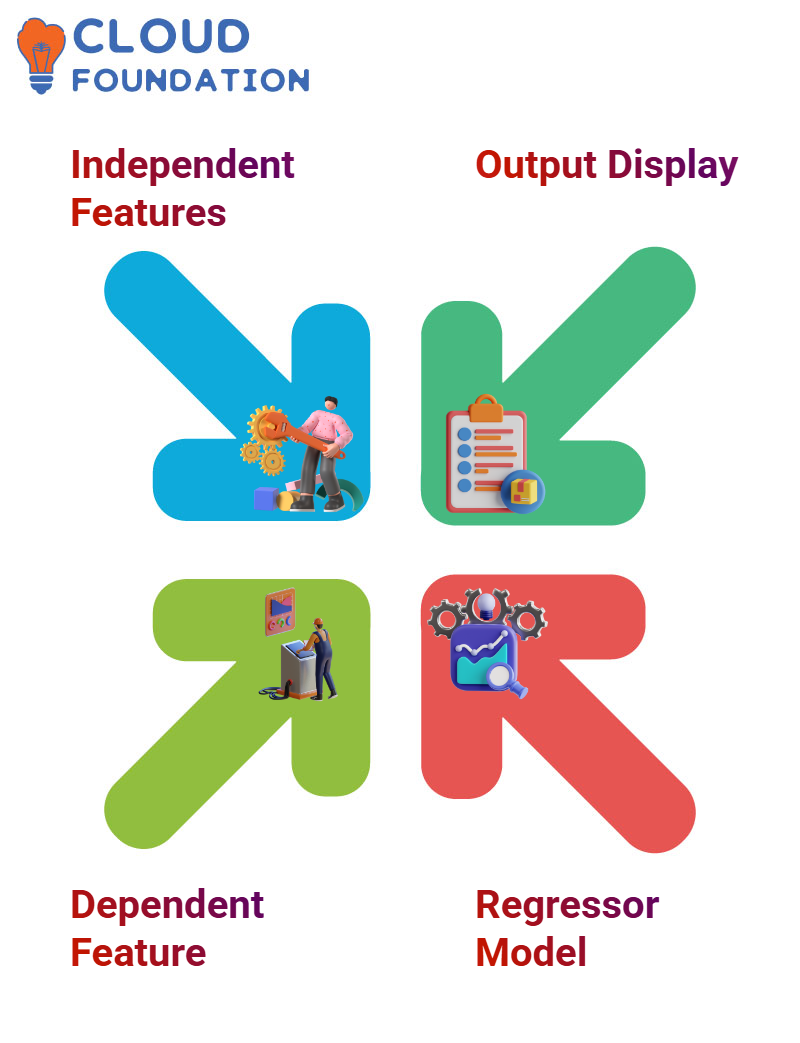

Independent Features in PySpark

PySpark lets users group several feature columns into a single feature vector by specifying the input columns; examples of such columns include tip, size, sex_index, smoker_index and time_index.

Every column named in that specification must be present in the data for the grouping to succeed.

Calling output.show() displays the data together with the grouped features, with tip as the first feature; output.select() picks out only the columns that should appear in the final data.

The column being predicted is the dependent feature, while the grouped input columns are the independent features.

To build the finalised data, use output.select() to keep two columns: the independent features and total_bill, the dependent feature.

Fitting the regressor on the training data may take some time. In the training and test sets, the independent features column is displayed in vector UDT (user-defined type) format.
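The feature-grouping and fitting steps described in this section can be sketched as below. This is an assumption-laden sketch, not the original author's code: it assumes the grouping is done with `VectorAssembler` and the regressor is `LinearRegression` from `pyspark.ml`, that `df` already contains the listed numeric columns, and that the column names, split fraction and seed shown here are acceptable placeholders.

```python
# Assumed column names; adjust them to match the actual data set.
FEATURE_COLS = ["tip", "size", "sex_index", "smoker_index", "time_index"]
FEATURES_COL = "Independent Features"
LABEL_COL = "total_bill"


def fit_regressor(df, train_fraction=0.75, seed=42):
    """Assemble the features, split the data and fit a linear regressor."""
    # Imported lazily; running this needs PySpark and a Java runtime.
    from pyspark.ml.feature import VectorAssembler
    from pyspark.ml.regression import LinearRegression

    # Group the input columns into one vector column.
    assembler = VectorAssembler(inputCols=FEATURE_COLS, outputCol=FEATURES_COL)
    output = assembler.transform(df)

    # Keep only the independent features and the dependent feature.
    finalized = output.select(FEATURES_COL, LABEL_COL)

    train, test = finalized.randomSplit(
        [train_fraction, 1 - train_fraction], seed=seed
    )
    model = LinearRegression(featuresCol=FEATURES_COL, labelCol=LABEL_COL).fit(train)
    return model, test
```

The returned model can then be evaluated on the held-out rows, for instance with `model.evaluate(test)`.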

Lazy evaluation

Lazy evaluation is a technique for optimising performance: an operation is not executed at the moment it is called, but deferred until its result is actually needed.

In Spark, this means data is loaded and transformations are applied only when an action requires the result. Because Spark records the chain of operations that produced each data set, it can also recover lost data: a lost partition is rebuilt by reapplying the recorded operations to it.

A transformation is applied to an RDD to produce a new RDD. An action instructs Spark to apply the computation and pass the result back to the driver, or to write it to storage such as a distributed file system.

Key transformations include map, flatMap, filter, distinct, reduceByKey and mapPartitions.

Important actions include collect, collectAsMap, reduce, countByKey, countByValue and take. All of them operate on the Spark RDD, the central data abstraction.
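The deferred behaviour can be seen in a plain-Python analogy. This is not Spark code: Python's built-in `map` and `filter` stand in for transformations and `list` stands in for an action, and the `calls` list exists only to record when work actually happens.

```python
calls = []


def double(x):
    calls.append(x)  # record that work actually happened
    return x * 2


data = [1, 2, 3, 4]

# "Transformations": building the pipeline runs nothing yet.
doubled = map(double, data)
large = filter(lambda v: v > 4, doubled)
calls_before_action = list(calls)  # still empty at this point

# "Action": materialising the result triggers all the deferred work.
result = list(large)

print(calls_before_action)  # []
print(result)               # [6, 8]
```

In PySpark the same shape appears as a chain such as `rdd.map(...).filter(...)`, which does no work until an action like `collect()` is called.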

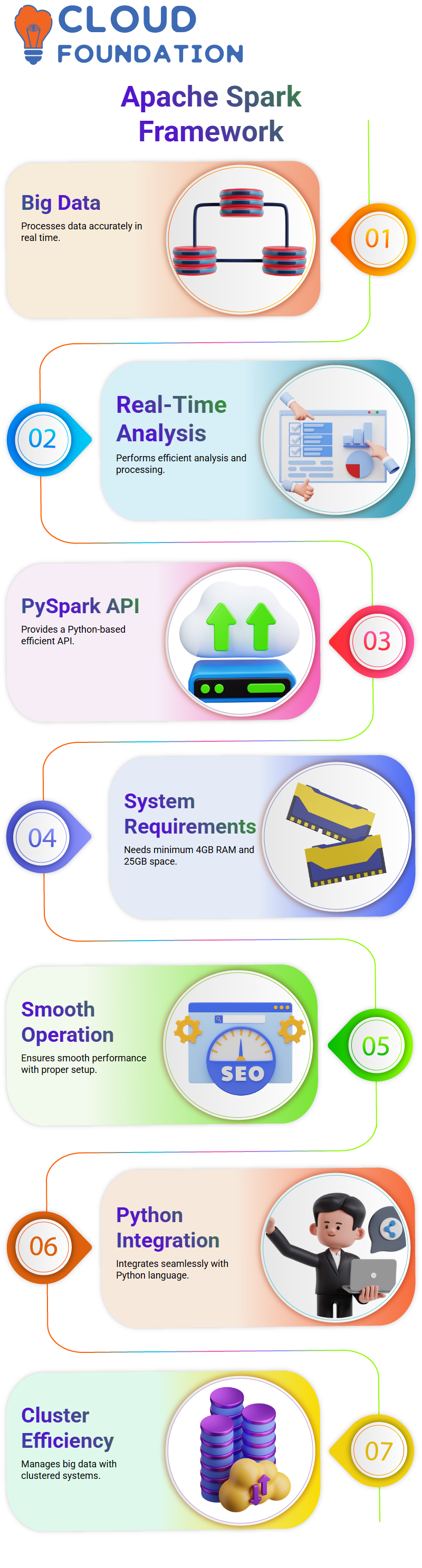

Apache Spark framework

The volume of data being produced has grown rapidly in recent years, making accurate analysis and processing increasingly necessary.

Apache Spark is one of the best frameworks for tackling big data in real time and conducting analyses quickly.

Apache Spark offers an effective Python Spark API (PySpark API).

Users wishing to set up the PySpark environment need to understand its system requirements: at least 4 GB of RAM (8 GB is preferable), at least 25 GB of free disk space, and at least three processor cores for smooth operation.

Apache Spark is an invaluable real-time big data processing and analysis solution, and understanding both hardware and software requirements for setup is critical for smooth performance of this platform.

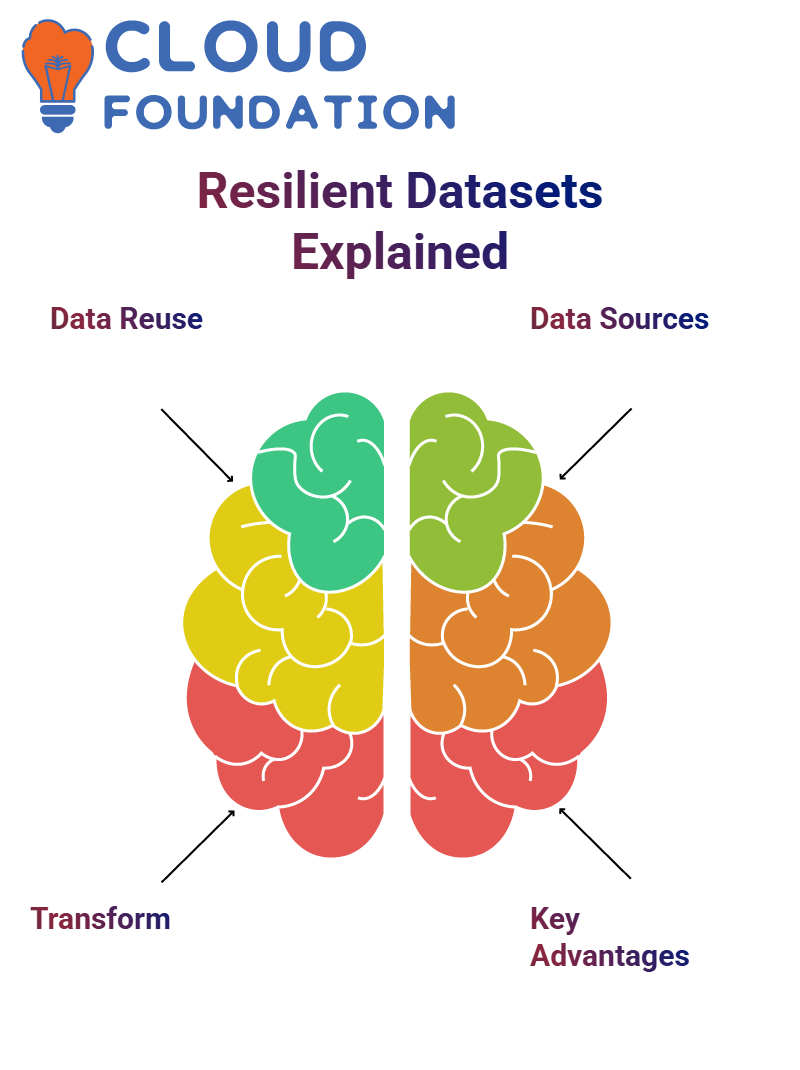

Resilient Distributed Datasets in PySpark

PySpark RDDs (Resilient Distributed Datasets) let users reuse data across operations efficiently, because an RDD can be persisted once it has been computed.

RDDs support coarse-grained transformations such as map, filter and groupBy, which apply the same operation to every element of a data set.

RDDs can be created in three ways: from parallelized collections, from existing RDDs, or from external data sources.

External data sources include HDFS, Amazon S3 and HBase. RDDs offer advantages such as in-memory computation, fault tolerance and reusability.

Handling complex operations and maintaining persistence is often challenging; loading data into RDDs, whether from collections or from external data stores, eases these difficulties.
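Creating an RDD from a collection and reusing it across operations can be sketched as below. This is a minimal sketch, assuming `sc` is an existing SparkContext; the function name and the even-number example are hypothetical.

```python
def squares_of_evens(sc, numbers):
    """Return the squares of the even numbers and how many there are."""
    # Create an RDD from an in-memory collection.
    rdd = sc.parallelize(numbers)

    # Transformations: each returns a new RDD and runs nothing yet.
    evens = rdd.filter(lambda n: n % 2 == 0)
    squares = evens.map(lambda n: n * n)

    # Mark the RDD for in-memory reuse across the two actions below.
    squares.cache()

    # Actions: these trigger the computation.
    return squares.collect(), squares.count()
```

An RDD backed by an external source could be created in a similar way with `sc.textFile(path)`, where the path points at a file on local disk, HDFS or S3.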

Conclusion

PySpark provides an efficient and scalable data processing and machine learning solution, harnessing Apache Spark for real-time analytics applications as well as big data applications.

Using Python gives access to Spark's large-scale processing and machine learning capabilities from a familiar language.

With components such as Spark SQL, Spark Streaming and MLlib, PySpark supports data manipulation, real-time processing and the construction of machine learning pipelines.

PySpark’s support of Resilient Distributed Datasets (RDDs) further increases its capability of handling distributed data while offering fault tolerance and superior performance.

PySpark stands out as an invaluable tool for modern data scientists and engineers who want to efficiently and quickly address complex data challenges.

With cloud integrations such as Google Cloud and an interactive shell, it gives them what they need to work efficiently with complex datasets.

Navya Chandrika

Author