Apache Flume Training | Learn Apache Flume Course

About Apache Flume

Apache Flume is the Apache Software Foundation’s open-source data collection tool. It collects, aggregates, and moves massive amounts of log data from diverse sources to HDFS (Hadoop Distributed File System) for processing and analysis.

Flume’s flexible, pluggable design lets it handle everything from simple log gathering to routing events through multi-stage pipelines. This blog covers Apache Flume’s main features and benefits, prerequisites, modes of learning, and certifications.

This overview can help whether you are learning about data-gathering technologies or looking to improve your log data management.

Benefits of Apache Flume

Using Apache Flume technology has several advantages, such as:

1. Scalability: Apache Flume scales to handle massive amounts of data. Its ability to gather and transfer data in real time from hundreds of servers makes it especially valuable in big data environments.

2. Flexibility: Apache Flume can read data from a wide variety of sources, including log files (through the exec, Spooling Directory, and Taildir sources), Avro and Thrift clients, syslog, HTTP, JMS, and Kafka. It also offers sinks for several storage systems, such as HDFS, HBase, Hive, and Kafka, which makes it a versatile solution for many use cases.

3. Real-Time Data Processing: Apache Flume gathers and moves data as it is produced, so the data can be analyzed shortly after it is generated.

4. Durability: Apache Flume is built to be reliable and to withstand failures without losing data. Events move through each agent inside transactions, and the file channel persists buffered events to a log on local disk, so they survive an agent crash or restart.

5. User-Friendly: Apache Flume is straightforward to set up and use. Agents are defined in a simple properties file, which makes data collection pipelines easy to configure and manage.

6. Extensibility: Apache Flume’s highly extensible design lets users write their own sources, sinks, channels, and interceptors to fit unique use cases.

7. Low Latency: Apache Flume is designed to move data with minimal delay, which makes it a good fit for use cases that need data to be available for processing quickly.

8. Integration: For big data contexts, Apache Flume is a popular choice due to its ease of integration with other technologies like Hadoop, HBase, and Avro.

Prerequisites for Learning Apache Flume

You will need the following to begin working with Apache Flume technology:

1. Familiarity with Java programming: First and foremost, you should be comfortable with the Java programming language, because Apache Flume is written in Java and its custom components are Java classes. You should be familiar with core concepts such as classes, objects, methods, and interfaces.

2. Basic understanding of big data technologies: Apache Flume is frequently used in big data environments, so a fundamental understanding of technologies like Hadoop, HBase, and Avro is helpful.

3. Knowledge of data collection and processing concepts: Working with Apache Flume requires a solid understanding of the fundamentals of data gathering, aggregation, and processing. Some background in data streaming and data pipelines also helps.

4. Familiarity with UNIX/Linux: Apache Flume is most commonly deployed on UNIX/Linux, although it is compatible with other operating systems. Proficiency with the Linux file system and command line is useful.

5. Familiarity with fundamental networking concepts: With Apache Flume, you can gather and transfer data through network connections. A basic understanding of TCP/IP, ports, and protocols can be useful in this context.

6. Knowledge of the Apache Hadoop ecosystem: Although it is not strictly required, a solid understanding of the Apache Hadoop ecosystem, including MapReduce and HDFS, is helpful when working with Apache Flume.

You will be more prepared to study and use Apache Flume technology if you have a clear understanding of these topics.


Apache Flume Tutorial

What is Apache Flume?

Apache Flume is an open-source data collection engine developed by the Apache Software Foundation. Its main function is to collect large amounts of log data from various sources and forward it to a centralized store such as the Hadoop Distributed File System (HDFS) or HBase. Avro is commonly used as the transport between Flume agents along the way.
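A Flume agent is defined in a Java properties file that names its sources, channels, and sinks and wires them together. The Python sketch below assembles such a file for the simple log-to-HDFS case described above. The property keys follow the Flume user guide; the agent name `a1`, the file paths, the NameNode URL, and the helper functions `render_properties` and `single_agent_config` are hypothetical placeholders chosen for illustration.

```python
def render_properties(props: dict[str, str]) -> str:
    """Render key/value pairs as Java properties lines, one per line."""
    return "\n".join(f"{key} = {value}" for key, value in props.items())


def single_agent_config(agent: str, log_file: str, hdfs_path: str) -> dict[str, str]:
    """Build a one-source, one-channel, one-sink agent configuration (illustrative)."""
    return {
        # Declare the components that live inside this agent.
        f"{agent}.sources": "r1",
        f"{agent}.channels": "c1",
        f"{agent}.sinks": "k1",
        # Exec source: run a command and turn each output line into an event.
        f"{agent}.sources.r1.type": "exec",
        f"{agent}.sources.r1.command": f"tail -F {log_file}",
        f"{agent}.sources.r1.channels": "c1",  # a source may feed several channels
        # File channel: buffers events on local disk so they survive a restart.
        f"{agent}.channels.c1.type": "file",
        f"{agent}.channels.c1.checkpointDir": "/var/flume/checkpoint",  # hypothetical path
        f"{agent}.channels.c1.dataDirs": "/var/flume/data",  # hypothetical path
        # HDFS sink: drain events from the channel into HDFS as plain text.
        f"{agent}.sinks.k1.type": "hdfs",
        f"{agent}.sinks.k1.hdfs.path": hdfs_path,
        f"{agent}.sinks.k1.hdfs.fileType": "DataStream",
        f"{agent}.sinks.k1.channel": "c1",  # a sink reads from exactly one channel
    }


if __name__ == "__main__":
    config = single_agent_config("a1", "/var/log/app.log", "hdfs://namenode/flume/logs")
    print(render_properties(config))
```

The printed text could be saved as, say, `a1.conf` and started with `flume-ng agent --conf conf --conf-file a1.conf --name a1`. The exec source is the simplest one to show, but the user guide cautions that it cannot guarantee delivery if the agent fails; the Spooling Directory or Taildir sources are the usual choice when that matters.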

The Importance of Data Ingestion for Businesses

Data ingestion is the process of moving data from the sources that generate it into the systems where a business stores and uses it, which makes it a crucial step for any data-driven business.

When data grows too large for existing systems to process, businesses need to ingest it into a platform designed to handle that volume.

Handling Structured and Unstructured Data in Business

The data generated by various business sources can be either structured or unstructured. Structured data has traditionally been handled by relational database management systems (RDBMS) that are queried with SQL.

Businesses have relied on these traditional systems for years. For large-scale analytics, however, they need ingestion tools that can pull data into a big data system.

Effectively Managing Data with Apache Flume for Data Ingestion

For structured data, Apache Sqoop is the tool that imports and exports data between an RDBMS and a big data platform like Hadoop.

Sqoop, however, works only with structured data.

Data ingestion is a crucial process for businesses to efficiently manage and process data from various sources.

Apache Flume helps ingest data from many sources, such as log files, servers, and event streams, into destinations like HDFS, so businesses can analyze and process it effectively.

Handling Unstructured Data in Big Data Platforms

Traditional systems are not designed to store or handle unstructured data at scale. Specialized tools are needed instead, such as NoSQL databases or big data platforms.

Processing unstructured data is another challenge that requires specialized tools on big data platforms. It involves collecting, aggregating, and temporarily storing the data, then writing it into a designated storage system.

Apache Flume and Its Features

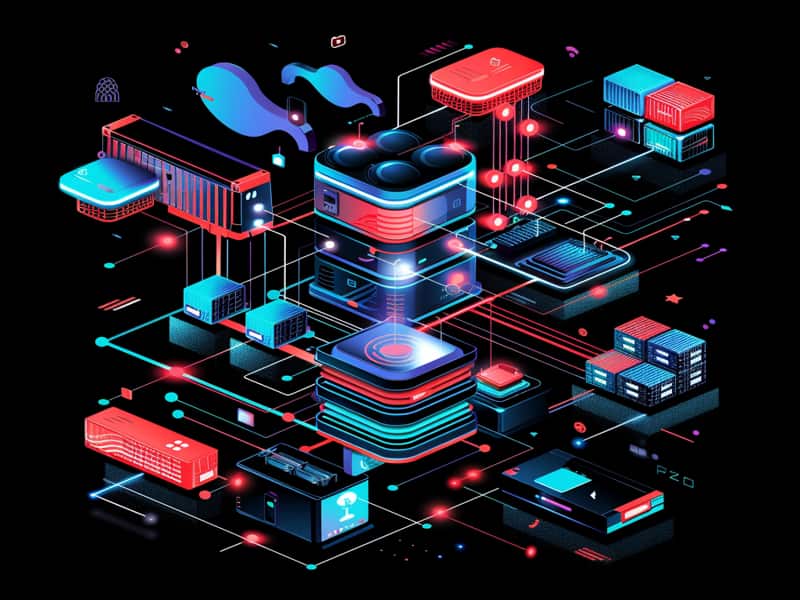

Apache Flume is an open-source tool that collects and streams live data, which is generally unstructured, from various sources such as cloud-based instances, social media, servers, and client machines.

Apache Flume agents aggregate the data and push it into a Hadoop cluster, where it can be written directly to HDFS or stored in HBase, a distributed database built on top of HDFS.
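To send the same events to both HDFS and HBase, a single agent can use a replicating channel selector that copies each event into two channels, each drained by its own sink. The Python sketch below builds that configuration as a dictionary and adds a small check that every channel a source or sink refers to is actually declared. It assumes an Avro source receiving events from upstream agents; the agent name, port, table and column-family names, and the helper functions are illustrative, and the `hbase` sink type should be checked against the Flume version in use.

```python
def fan_out_config(agent: str, hdfs_path: str, hbase_table: str, column_family: str) -> dict[str, str]:
    """Build an agent that copies every incoming event to both HDFS and HBase."""
    return {
        f"{agent}.sources": "r1",
        f"{agent}.channels": "c1 c2",
        f"{agent}.sinks": "k1 k2",
        # Avro source: receives events from upstream agents (hypothetical port).
        f"{agent}.sources.r1.type": "avro",
        f"{agent}.sources.r1.bind": "0.0.0.0",
        f"{agent}.sources.r1.port": "4545",
        # Replicating selector: put a copy of each event into every listed channel.
        f"{agent}.sources.r1.selector.type": "replicating",
        f"{agent}.sources.r1.channels": "c1 c2",
        f"{agent}.channels.c1.type": "memory",
        f"{agent}.channels.c2.type": "memory",
        # k1 drains c1 into HDFS.
        f"{agent}.sinks.k1.type": "hdfs",
        f"{agent}.sinks.k1.hdfs.path": hdfs_path,
        f"{agent}.sinks.k1.channel": "c1",
        # k2 drains c2 into an HBase table.
        f"{agent}.sinks.k2.type": "hbase",
        f"{agent}.sinks.k2.table": hbase_table,
        f"{agent}.sinks.k2.columnFamily": column_family,
        f"{agent}.sinks.k2.channel": "c2",
    }


def undeclared_channels(agent: str, cfg: dict[str, str]) -> list[str]:
    """Return channel names that a source or sink uses but the agent never declares."""
    declared = set(cfg.get(f"{agent}.channels", "").split())
    used: set[str] = set()
    for key, value in cfg.items():
        if key.startswith(f"{agent}.sources.") and key.endswith(".channels"):
            used.update(value.split())
        elif key.startswith(f"{agent}.sinks.") and key.endswith(".channel"):
            used.add(value)
    return sorted(used - declared)


if __name__ == "__main__":
    cfg = fan_out_config("a1", "hdfs://namenode/flume/events", "events", "cf")
    print(undeclared_channels("a1", cfg))  # [] when the wiring is consistent
```

Using one channel per sink means a slow or failing HBase write backs up only its own channel and does not block delivery to HDFS.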

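Apache Flume moves data between components using transactions. In a multi-hop flow, the sink on the sending agent and the source on the receiving agent each run a transaction, and an event is removed from the sender’s channel only after it has been safely stored in the receiver’s channel or in the final destination.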

This transactional handoff is what lets Flume guarantee delivery and ensure that data reaches its destination correctly.
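The take-side transaction can be illustrated with a toy model. In the Python sketch below, a hypothetical `Channel` class lets a sink take a batch of events and hand it to a delivery function; if delivery fails, the events are rolled back into the channel instead of being lost. This is not Flume's API, which is Java; it only mimics the commit/rollback behavior under simplifying assumptions (a single thread, an in-memory queue, events represented as strings).

```python
from collections import deque
from typing import Callable


class Channel:
    """Toy single-threaded channel that mimics Flume's take/commit/rollback."""

    def __init__(self) -> None:
        self._events: deque[str] = deque()

    def __len__(self) -> int:
        return len(self._events)

    def put(self, event: str) -> None:
        """Source side: store an event until a sink takes it."""
        self._events.append(event)

    def take_batch(self, deliver: Callable[[list[str]], None], batch_size: int) -> bool:
        """Sink side: take up to batch_size events inside one 'transaction'.

        Returns True if deliver() succeeded (commit) and False if it raised
        (rollback, with the events restored to the front in their original order).
        """
        taken = [self._events.popleft() for _ in range(min(batch_size, len(self._events)))]
        try:
            deliver(taken)
        except Exception:
            # Rollback: nothing is lost; the next attempt sees the same events.
            self._events.extendleft(reversed(taken))
            return False
        # Commit: the destination accepted the batch, so the events leave the channel.
        return True


if __name__ == "__main__":
    channel = Channel()
    for name in ["e1", "e2", "e3"]:
        channel.put(name)

    def unavailable_hdfs(batch: list[str]) -> None:
        raise OSError("destination unavailable")  # simulated outage

    print(channel.take_batch(unavailable_hdfs, 2), len(channel))  # False 3
    received: list[str] = []
    print(channel.take_batch(received.extend, 2), received, len(channel))  # True ['e1', 'e2'] 1
```

Because a batch that fails partway through is redelivered in full, some events can reach the destination twice. That is why Flume's delivery guarantee is usually described as at-least-once rather than exactly-once.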

Flume acts as an agent that streamlines the flow of streaming data into HDFS, which is designed for batch-oriented storage and processing. Rather than writing each event individually, the agent buffers events and writes them to HDFS in batches.

In this role the agent works as a collector, passing data along at a pace the destination can absorb so that no data points are missed and all data is stored.

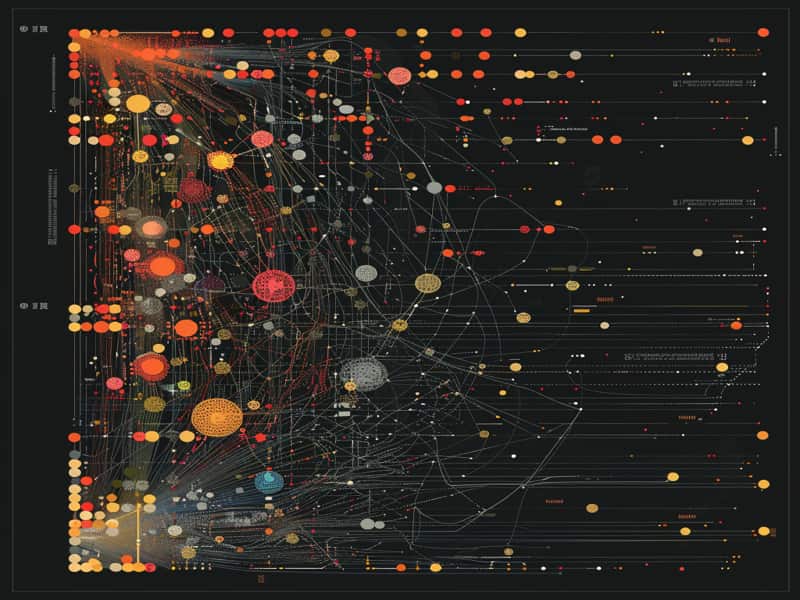

Apache Flume Data Flow Concepts

Data flow concepts are essential for understanding how data moves through multiple flows in Apache Flume. Data from agents is collected by an intermediate node called a collector, which stores it temporarily.

Collectors are similar to agents but play a different role: they provide temporary storage. From these collectors, or more precisely from their channels, the data is pushed to the destination through a sink.
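The channel's role as a temporary buffer between a fast source and a slower sink can be sketched with a toy simulation. In the hypothetical `run_pipeline` function below, the channel has a fixed capacity, similar in spirit to the memory channel's `capacity` setting; when it is full, the source keeps its remaining events and retries on the next step instead of dropping them, and the sink drains a fixed-size batch each step. The step-by-step loop is a simplifying assumption; real agents run sources and sinks on separate threads.

```python
from collections import deque
from typing import Iterable


def run_pipeline(events: Iterable[str], capacity: int, sink_batch_size: int) -> tuple[list[str], int]:
    """Simulate source -> bounded channel -> sink, one step at a time.

    Returns the events in the order they reached the destination and the
    number of times the source found the channel full.
    """
    if capacity < 1 or sink_batch_size < 1:
        raise ValueError("capacity and sink_batch_size must be positive")
    pending = deque(events)  # events the source has not handed over yet
    channel: deque[str] = deque()  # temporary storage between source and sink
    destination: list[str] = []
    full_count = 0
    while pending or channel:
        # Source step: fill the channel until it is full or nothing is left.
        while pending:
            if len(channel) >= capacity:
                full_count += 1  # channel full: keep the rest and retry next step
                break
            channel.append(pending.popleft())
        # Sink step: move one batch from the channel to the destination.
        for _ in range(min(sink_batch_size, len(channel))):
            destination.append(channel.popleft())
    return destination, full_count


if __name__ == "__main__":
    burst = [f"event-{i}" for i in range(10)]
    delivered, full = run_pipeline(burst, capacity=4, sink_batch_size=3)
    print(len(delivered), full)  # 10 2
```

In this run the burst of ten events is larger than the channel, so the source is held back twice, yet every event still reaches the destination in order. A larger capacity absorbs bigger bursts at the cost of more memory or disk.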

The first type of data flow is multi-hop flow, which involves collecting data from the source, temporarily storing it, and pushing it on to the next agent or the final destination.

In a multi-hop flow, data hops between multiple Flume agents before it reaches the final destination: the sink of one agent sends events to the source of the next.
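A two-hop flow is usually wired with an Avro sink on the first agent pointing at an Avro source on the next. The Python sketch below builds both configurations and checks that the sink and source of the hop agree on protocol and port. The agent names `web` and `collector`, the host name, the port, the log path, and the helper functions are hypothetical; the property keys follow the Flume user guide.

```python
def multi_hop_configs(collector_host: str, port: int, hdfs_path: str) -> tuple[dict[str, str], dict[str, str]]:
    """Return (first-hop config, second-hop config) for a two-agent flow."""
    web = {
        "web.sources": "r1",
        "web.channels": "c1",
        "web.sinks": "k1",
        # Tail a local application log (hypothetical path).
        "web.sources.r1.type": "exec",
        "web.sources.r1.command": "tail -F /var/log/app.log",
        "web.sources.r1.channels": "c1",
        "web.channels.c1.type": "memory",
        # Avro sink: forward events over the network to the next hop.
        "web.sinks.k1.type": "avro",
        "web.sinks.k1.hostname": collector_host,
        "web.sinks.k1.port": str(port),
        "web.sinks.k1.channel": "c1",
    }
    collector = {
        "collector.sources": "r1",
        "collector.channels": "c1",
        "collector.sinks": "k1",
        # Avro source: listen for events sent by upstream Avro sinks.
        "collector.sources.r1.type": "avro",
        "collector.sources.r1.bind": "0.0.0.0",
        "collector.sources.r1.port": str(port),
        "collector.sources.r1.channels": "c1",
        "collector.channels.c1.type": "file",
        # Final hop: write the aggregated events into HDFS.
        "collector.sinks.k1.type": "hdfs",
        "collector.sinks.k1.hdfs.path": hdfs_path,
        "collector.sinks.k1.channel": "c1",
    }
    return web, collector


def hop_is_wired(upstream: dict[str, str], downstream: dict[str, str]) -> bool:
    """Check that the upstream Avro sink targets the downstream Avro source's port."""
    return (
        upstream["web.sinks.k1.type"] == "avro"
        and downstream["collector.sources.r1.type"] == "avro"
        and upstream["web.sinks.k1.port"] == downstream["collector.sources.r1.port"]
    )


if __name__ == "__main__":
    web, collector = multi_hop_configs("collector.example.com", 4545, "hdfs://namenode/flume/events")
    print(hop_is_wired(web, collector))  # True
```

Each configuration would be started as its own agent, for example with `--name web` on the application servers and `--name collector` on the aggregation host. Many first-hop agents can point at the same collector, which is the fan-in pattern that lets Flume gather logs from many servers.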

Flume vs Sqoop: Understanding the Key Differences

Sqoop is an import-export tool, meaning it can move data both into and out of Hadoop, and it works with structured data. Flume, by contrast, is agent-based: dedicated agents run continuously and push data as soon as an event is created.

Flume works with streaming, live data, while Sqoop works with data at rest in relational databases.

The key difference is that Flume is event-driven: it comes into play only when an event is created and that data needs to move right away.

Sqoop, in contrast, is command-driven, meaning you run a command whenever you want to transfer data.

With Sqoop, data can move from SQL databases to HDFS or from HDFS back to SQL databases. Flume can consume data from many kinds of sources, while Sqoop can consume only structured data.


Modes of learning Apache Flume

Like many technologies, Apache Flume can be learned in several ways. The two most common are instructor-led live training and self-paced learning.

Self-Paced

In self-paced learning, you’re in charge of setting your goals and objectives and managing your time. You can tailor your learning experience to your personal needs, preferences, and pace of understanding.

This approach permits you to take breaks when you require them, spend more time on challenging points, and move rapidly through the material that doesn’t seem too difficult.

Self-paced learning typically relies on resources such as online courses, tutorials, books, and videos that let you learn independently, without the constraints of a traditional classroom.

It’s all about encouraging learners to take control of their lessons and learn in a way that works best for them.

Instructor-Led Live Training

In instructor-led live training, an experienced instructor leads the sessions, providing structured lessons and guidance throughout.

This format typically involves scheduled classes that participants attend remotely or in person, depending on the setup.

The instructor delivers presentations, leads discussions, gives demonstrations, and assigns tasks or exercises to reinforce learning.

Participants can take an active part in the sessions, ask questions, and collaborate with peers, creating a dynamic, interactive learning environment.

The instructor’s presence provides accountability, motivation, and personalized support, which increases the effectiveness of the entire training program.

Apache Flume Certification

An Apache Flume certification is a credential that verifies one’s competence with the Apache Flume data collection engine.

There are many aspects of Apache Flume that a certification program can cover, including but not limited to installation, configuration, operation, and troubleshooting.

Exams for the Apache Flume certification could include questions about:

- Getting Apache Flume up and running

- Understanding the inner workings of Apache Flume

- Setting up data collectors and sources

- Using and configuring different sinks

- Monitoring Apache Flume and resolving issues

- Implementing Apache Flume for use with large datasets

An individual’s competence and knowledge in the technology can be showcased to prospective employers, clients, or coworkers by earning an Apache Flume certification.

Apache Flume certifications often fall into the following categories:

1. Role-based certifications: These focus on specific jobs. Administrators, developers, and architects working with Apache Flume, for instance, could pursue qualifications specific to their role.

2. Technology-focused certifications: These address particular uses of Apache Flume, such as its integration with Hadoop or its use for log collection and aggregation.

3. Industry-specific certifications: Some groups and associations may offer this kind of certification, focusing on how Apache Flume is used in a particular industry.

Those working in healthcare, for instance, who receive and handle sensitive patient data using Apache Flume may be required to get credentials in the field.

One way to demonstrate to potential employers and clients how competent you are with Apache Flume technology is to get the appropriate certification, which should be based on your career aspirations and area of specialty.


Ravi

Author
