What Is Compiler Design?

Compiler design is the practice of building software that translates high-level programming languages into machine code. The goal is a translation that is both efficient and accurate, so that the result needs little manual intervention or debugging.

Efficiency and accuracy are the central concerns of compiler design. The generated machine code must preserve the meaning of the source program and should run fast enough to keep execution times low.

Keeping these objectives in focus lets developers produce software that is fast and reliable.

Programs, Compilation, and Translation in Compiler Design

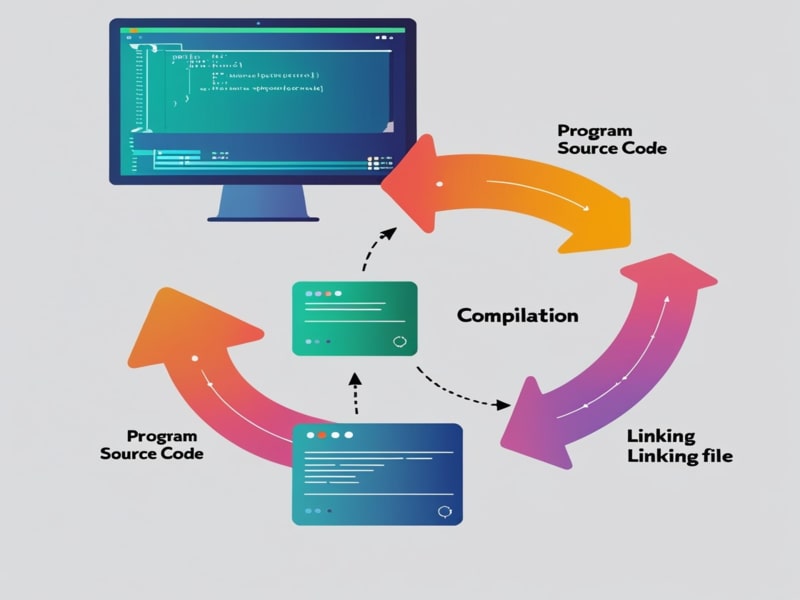

An application usually consists of many functions and files, including library files such as DLLs (dynamic link libraries). The compiler translates the source files so that they can later be combined and executed correctly.

The preprocessor plays an essential part in the development process by translating one program into another: it rewrites the source text, for example by expanding include statements, before the compiler proper sees it. This step helps ensure that the program handed to the compiler is complete and faithful to what the programmer wrote.

Understanding the various kinds of programs, and their specific purposes and translation, helps create effective and functional software applications.

Understanding Sequential Execution in Multi-File Programs

Programmers need a solid understanding of sequential execution when a program spans multiple files, and of how the preprocessor fits into that picture.

The operating system must know where execution starts: it begins at one entry function and proceeds, in order, through the functions called from there.

Proper handling of include statements is key to seamless execution, because it lets the preprocessor assemble the complete source text before the program is compiled and prepared for run time.
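
The include handling described here can be sketched in a few lines. This is a minimal illustration, not how any particular preprocessor is implemented: the file names, the in-memory `FILES` dictionary and the `expand` function are all assumptions made for the example, and only the quoted form of `#include` is handled.

```python
import re

# Hypothetical in-memory "files"; a real preprocessor reads them from disk.
FILES = {
    "main.c": '#include "util.h"\nint main(void) { return helper(); }',
    "util.h": "int helper(void);",
}

INCLUDE = re.compile(r'^\s*#include\s+"([^"]+)"\s*$')

def expand(name, seen=None):
    """Return the text of `name` with each #include line replaced by the included file."""
    seen = set() if seen is None else seen
    if name in seen:              # crude guard: include each file at most once
        return ""
    seen.add(name)
    out = []
    for line in FILES[name].splitlines():
        match = INCLUDE.match(line)
        out.append(expand(match.group(1), seen) if match else line)
    return "\n".join(out)

expanded = expand("main.c")
print(expanded)
# int helper(void);
# int main(void) { return helper(); }
```

The output is a single source text in which the declaration from the header precedes its use, which is the form the compiler expects to receive.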

Though execution appears simply sequential, it follows an organized, planned procedure that is tied to the operating system. A well-organized approach helps create functional programs.

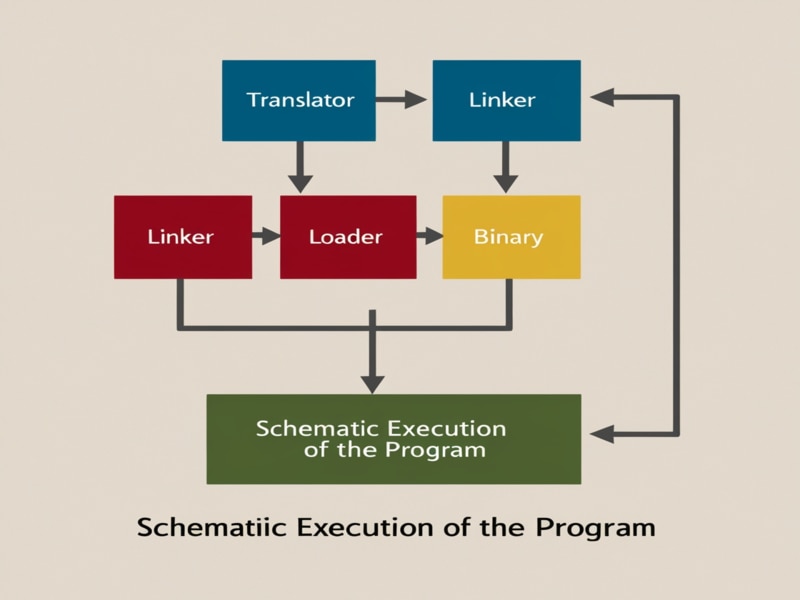

Role of Loaders and Linkers in Program Translation

How a program is translated depends heavily on the characteristics of its target language. A loader is the tool responsible for loading a program, together with its modules and other required components, into memory.

Within an operating system, the loader oversees every phase of the loading process so that the program can execute properly; this includes the bootstrap loader that runs at system start-up.

Loaders perform several roles. For a compiled C program, for instance, the loader carries out relocation, adjusting addresses where required, so that both code and data end up at the correct locations in memory.
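
Relocation can be illustrated with a toy model. The sketch below assumes a made-up instruction format of `(opcode, address)` pairs and a list of positions that need patching; real object formats record this information differently, and the names `relocate` and `load_base` are hypothetical.

```python
def relocate(code, relocation_offsets, load_base):
    """Return a copy of `code` with each listed address operand shifted by `load_base`."""
    patched = list(code)
    for i in relocation_offsets:
        op, addr = patched[i]
        patched[i] = (op, addr + load_base)
    return patched

# The module was translated as if it started at address 0.
module = [("LOAD", 4), ("ADD", 5), ("JUMP", 0), ("HALT", None)]
relocs = [0, 1, 2]                      # instructions whose operand is an address

loaded = relocate(module, relocs, load_base=0x1000)
print(loaded)
# [('LOAD', 4100), ('ADD', 4101), ('JUMP', 4096), ('HALT', None)]
```

Only the entries listed in `relocs` are changed, which is why a translator has to record which operands are addresses and which are plain constants.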

Allocating memory for a program depends on loader support, which makes the loader an integral part of the translation pipeline. Linkers play an equally essential part: they resolve external references between files and programs.

Preprocessing identifies the files named in include statements; linking ensures that references to functions and variables are matched to their definitions.

Managing functions and variables means knowing where they are located and how they are accessed, particularly when calling library functions such as printf.

When such a function is called, its definition and address must be available. Any reference that cannot be found is reported as unresolved and has to be fixed, for example by supplying the missing library to the linker.

External names, such as global variables, need the same care as functions. Locating their correct addresses ensures seamless execution.

The linker handles all of these references so that the translated program is complete and consistent.
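
A simplified picture of how external references are resolved is sketched below. The object-file layout (a dictionary with `defines` and `references`), the addresses and the `link` function are assumptions for illustration only; real linkers work on binary object formats and do considerably more.

```python
class LinkError(Exception):
    """Raised when a symbol is defined twice or never defined."""

def link(objects):
    """Build a global symbol table, then check every external reference against it."""
    symbols = {}
    for obj in objects:
        for name, address in obj["defines"].items():
            if name in symbols:
                raise LinkError(f"duplicate symbol: {name}")
            symbols[name] = address
    for obj in objects:
        for name in obj["references"]:
            if name not in symbols:
                raise LinkError(f"undefined reference to {name}")
    return symbols

# Hypothetical object files: main.o calls printf and uses a global variable.
main_o = {"defines": {"main": 0x100}, "references": ["printf", "counter"]}
libc = {"defines": {"printf": 0x400}, "references": []}
data_o = {"defines": {"counter": 0x800}, "references": []}

table = link([main_o, libc, data_o])
print(hex(table["printf"]))   # 0x400
```

Linking `main_o` on its own raises `LinkError`, which mirrors the unresolved-reference situation described above for printf and global variables.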

Static and Dynamic Implementation of Linkers and Loaders

Linkers and loaders play an essential part in both compilation and execution. Depending on the needs of the program, each can be implemented statically or dynamically.

Static and dynamic loading are the two approaches used for loading programs: static loading places the whole program in memory before execution begins, while dynamic loading brings a module into memory only when it is first needed.

Linking follows the same split. Static linking copies library code into the executable at link time, while dynamic linking defers resolution until load time or run time; modern systems often use a hybrid of the two.

Loaders fall under the same classification. Their primary task is to carry out loading just before the program executes.

When an address in the program cannot be used as written, the loader adjusts it so that the affected components are loaded into memory correctly.

The hybrid model provides greater adaptability, which makes it a good fit for a wide range of loading and linking needs.
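
The difference between loading everything up front and loading on demand can be shown by analogy in Python, whose standard `importlib.import_module` function loads a module at run time. This is only an analogy to what an operating-system loader does; the `LazyModule` wrapper is a hypothetical helper written for this sketch.

```python
import importlib

class LazyModule:
    """Defer loading a module until one of its attributes is first used."""

    def __init__(self, name):
        self._name = name
        self._module = None          # nothing loaded yet

    def __getattr__(self, attr):
        # Called only for attributes not found on the wrapper itself.
        if self._module is None:
            self._module = importlib.import_module(self._name)
        return getattr(self._module, attr)

lazy_json = LazyModule("json")
loaded_before = lazy_json._module is not None   # False: not loaded yet
text = lazy_json.dumps([1, 2])                  # first use triggers the load
loaded_after = lazy_json._module is not None    # True
print(loaded_before, text, loaded_after)
```

A statically loaded program corresponds to importing every module at the top of the file; the wrapper above corresponds to the dynamic case, where the cost of loading is paid only if the module is actually used.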

Compiler Phases and Code Optimization

A compiler translates a program from source code into an executable form, and it reports errors in situations it cannot handle, such as names whose addresses cannot be located.

The later part of the compilation process typically consists of three key stages: intermediate code generation, code optimization and code generation.

Compilers play an essential role in translating source code into executable code efficiently; intermediate code generation and optimization techniques are the key features that improve performance.

The compilation process as a whole encompasses five primary stages and, depending on optimization requirements, can include two optimization stages, for a total of seven.

Compilation relies on a symbol table, which stores the symbols seen so far and tracks new identifiers so that they can be recognized wherever they appear in the program.

Understanding these parts and their roles helps with efficient code management and clarifies how a compiler functions.

Role of the Symbol Table in Compiler Design

The symbol table plays an essential part in compilation by storing and managing the information the compiler gathers, including identifiers, variables and tokens.

It is first populated during lexical analysis and is then consulted by the later compilation stages. Stored entries can be modified and reused, and an error handler works alongside it to manage mistakes found at different stages.

The compiler's error handling mechanisms report problems at the stage where they are detected. Key information recorded in the symbol table includes lexemes, token details, identifier classifications and variable assignments, as well as line numbers and semantic information such as data types.

Variable types are recorded so that later stages, such as intermediate code generation, handle each variable correctly. Lexemes are classified as identifiers or keywords to reflect their function during compilation.

By organizing and managing data systematically, the symbol table improves compilation efficiency while supporting error detection and resolution.
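
A symbol table holding the fields listed above might look like the following sketch. The class and field names (`Symbol`, `SymbolTable`, `data_type` and so on) are assumptions chosen for the example; production compilers usually add scopes and many more attributes.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Symbol:
    lexeme: str                      # the text as written in the source
    token: str                       # internal name such as "id1"
    line: int                        # line where the lexeme first appeared
    data_type: Optional[str] = None  # filled in by a later phase

class SymbolTable:
    def __init__(self):
        self._entries = {}

    def insert(self, lexeme, line):
        """Add an identifier on first sight; later sightings reuse the same entry."""
        if lexeme not in self._entries:
            token = f"id{len(self._entries) + 1}"
            self._entries[lexeme] = Symbol(lexeme, token, line)
        return self._entries[lexeme]

    def set_type(self, lexeme, data_type):
        self._entries[lexeme].data_type = data_type

    def lookup(self, lexeme):
        return self._entries.get(lexeme)   # None if the name is unknown

table = SymbolTable()
table.insert("rate", line=1)
table.insert("total", line=2)
table.insert("rate", line=5)               # already present, entry is reused
table.set_type("rate", "int")
print(table.lookup("rate"))
# Symbol(lexeme='rate', token='id1', line=1, data_type='int')
```

Returning `None` from `lookup` for an unknown name gives the error handler a simple way to detect undeclared identifiers.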

Code Execution, Token Analysis and Syntax Processing in Compiler Design

The source program is transformed repeatedly as it passes through the compiler, and each phase produces a different representation of it. During lexical analysis, each identifier is mapped to one token for the later phases to work with.

Internal token names cover both user-defined and compiler-defined entities. A token such as id1 is a placeholder: it does not reproduce the original source text, but it identifies which entry the compiler expects at that point.

Lexical analysis has its own challenges, such as excess whitespace or inconsistencies in the source code. The symbol table stores the identifier tokens, such as id1 or id2.

These entries are then reused by the subsequent phases whenever the same variables appear again.

Maintaining an organized structure and discarding unnecessary whitespace ensures smooth processing. The compiler keeps track of the token sequence, with the symbol table serving as storage for the tokens that represent values.
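
The mapping from identifiers to tokens such as id1 and id2 can be sketched with a small regular-expression lexer. The token pattern and the `tokenize` function are assumptions for this example; it does not distinguish keywords from identifiers and supports only a handful of operators.

```python
import re

# Optional whitespace, then an identifier, a number, or a one-character operator.
TOKEN = re.compile(r"\s*(?:(?P<id>[A-Za-z_]\w*)|(?P<num>\d+)|(?P<op>[=+\-*/()]))")

def tokenize(source):
    """Return (tokens, symbols); identifiers are replaced by id1, id2, ..."""
    symbols = {}
    tokens = []
    pos = 0
    source = source.rstrip()
    while pos < len(source):
        m = TOKEN.match(source, pos)
        if not m:
            raise SyntaxError(f"unexpected character at or after position {pos}")
        pos = m.end()
        if m.group("id"):
            name = m.group("id")
            symbols.setdefault(name, f"id{len(symbols) + 1}")
            tokens.append(symbols[name])
        else:
            tokens.append(m.group("num") or m.group("op"))
    return tokens, symbols

tokens, symbols = tokenize("position = initial  +  rate * 60")
print(" ".join(tokens))   # id1 = id2 + id3 * 60
print(symbols)            # {'position': 'id1', 'initial': 'id2', 'rate': 'id3'}
```

The extra spaces in the input disappear from the token stream, and the original names survive only in the table, which is what lets later phases work with uniform placeholders.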

Syntax analysis processes this token sequence by building a parse tree, which validates the sequence against the grammar. Any discrepancy triggers an error message, and stray elements or characters are detected in the same way.

Syntax analysis can also track line numbers so that problems are reported where they occur.

Error handling mechanisms must account for several kinds of issues, including parse tree errors and computational limitations.

If the system cannot assign an acceptable value, it generates an error message; limits such as available computational power must also be considered before further action is taken.

Compilers for languages such as C rely on several formal techniques to interpret their input: lexical analysis uses regular expressions and deterministic finite automata (DFA), while syntax analysis uses parsing techniques such as top-down and bottom-up parsing.

Pushdown automata (PDA), including deterministic pushdown automata (DPDA), provide the stack-based model for analyzing the structure of the input and passing the result on to semantic analysis.
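
Top-down parsing can be illustrated with a small recursive-descent parser, in which the call stack of the host language plays the role of the pushdown stack. The grammar (expressions over `+ - * /` with parentheses) and the `parse` function are assumptions for this sketch, and the tree it returns is a simplified one with operators as interior nodes rather than a full parse tree.

```python
def parse(tokens):
    """Parse a token list; return a nested-tuple tree or raise SyntaxError."""
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        return tok

    def expr():                       # expr -> term (('+' | '-') term)*
        node = term()
        while peek() in ("+", "-"):
            op = advance()
            node = (op, node, term())
        return node

    def term():                       # term -> factor (('*' | '/') factor)*
        node = factor()
        while peek() in ("*", "/"):
            op = advance()
            node = (op, node, factor())
        return node

    def factor():                     # factor -> NAME | NUMBER | '(' expr ')'
        tok = peek()
        if tok is None:
            raise SyntaxError("unexpected end of input")
        if tok == "(":
            advance()
            node = expr()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return node
        if tok.isalnum():
            return advance()
        raise SyntaxError(f"unexpected token {tok!r}")

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree

tree = parse(["id2", "+", "id3", "*", "60"])
print(tree)   # ('+', 'id2', ('*', 'id3', '60'))
```

An incomplete input such as `["id2", "+"]` raises `SyntaxError`, which corresponds to the error message a parser issues when the token sequence does not fit the grammar.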

Semantic analysis plays an instrumental role in recognizing data types. Declared integers and characters are classified accordingly: integer values are recorded as integer types, character values as character types, and strings as string types.

Well-defined grammars describe the expected input so that it is categorized accurately. By combining syntax and semantic analysis, the compiler processes its input systematically, verifying both structure and meaning before moving on to the next step.

Importance of Type Information in Code Generation

Type information plays an essential part in producing optimized and efficient code. A code generator relies on input that is free of syntax errors and inconsistencies, and it must produce consistent output for the same input.

Understanding internal rules and type assignments allows for improved intermediate code generation. The code optimization process strives to reduce unnecessary variables and operations as much as possible.

Code generators convert high-level code into equivalent assembly instructions while keeping moves and computations efficient. Where a step contributes nothing to the result, optimizations such as eliminating redundant moves and simplifying expressions can be applied.
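
Eliminating a redundant move can be shown on a toy target. The sketch assumes a hypothetical single-accumulator machine with `LOAD`, `STORE` and arithmetic instructions, straight-line code with no jump targets, and a `STORE` that leaves the accumulator unchanged; the `generate` and `peephole` functions are illustrative names, not part of any real code generator.

```python
def generate(tac):
    """Translate (dest, left, op, right) tuples into accumulator-machine instructions."""
    opcodes = {"+": "ADD", "-": "SUB", "*": "MUL", "/": "DIV"}
    code = []
    for dest, left, op, right in tac:
        code.append(("LOAD", left))
        code.append((opcodes[op], right))
        code.append(("STORE", dest))
    return code

def peephole(code):
    """Drop a LOAD that immediately follows a STORE to the same name."""
    out = []
    for instr in code:
        if instr[0] == "LOAD" and out and out[-1] == ("STORE", instr[1]):
            continue              # the accumulator already holds this value
        out.append(instr)
    return out

# t1 = id3 * 60 ; id1 = t1 + id2
tac = [("t1", "id3", "*", "60"), ("id1", "t1", "+", "id2")]
naive = generate(tac)
optimized = peephole(naive)
for op, arg in optimized:
    print(op, arg)
# LOAD id3
# MUL 60
# STORE t1
# ADD id2
# STORE id1
```

The naive translation has six instructions; the peephole pass removes the `LOAD t1` that would reload a value the accumulator already holds.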

Maintaining type consistency throughout the code generation phase prevents errors, while optimized code generation improves performance by reducing memory consumption and execution time.

Code Optimization and Compiler Phases in Compiler Design

Optimizing code involves refining its three-address code to remove redundant operations. The compiler analyzes how variables are used so that only essential instructions appear in its output.
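
One way to keep only essential instructions is dead-code elimination driven by variable usage. The sketch below assumes straight-line three-address code written as `(dest, left, op, right)` tuples, operations without side effects, and a known set of variables that are needed afterwards; `eliminate_dead_code` and `live_out` are hypothetical names.

```python
def eliminate_dead_code(tac, live_out):
    """Remove assignments whose result is never used afterwards."""
    live = set(live_out)
    kept = []
    for dest, left, op, right in reversed(tac):   # scan from the last instruction
        if dest in live:
            live.discard(dest)
            # Operands of a kept instruction become live; constants are skipped.
            live.update(x for x in (left, right) if not x.isdigit())
            kept.append((dest, left, op, right))
    kept.reverse()
    return kept

tac = [
    ("t1", "a", "+", "b"),
    ("t2", "a", "*", "b"),    # t2 is never used
    ("t3", "t1", "-", "c"),   # t3 is never used
    ("x", "t1", "+", "c"),
]
optimized = eliminate_dead_code(tac, live_out={"x"})
print(optimized)
# [('t1', 'a', '+', 'b'), ('x', 't1', '+', 'c')]
```

Scanning backwards means each instruction is judged against what is still needed after it, so unused temporaries are dropped in a single pass.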

Code generators translate the remaining operations into machine-level instructions. At the other end of the pipeline, lexical analysis forms the compiler's initial phase.

Elements from the theory, such as tokenization, symbol table management and syntax validation, contribute to an efficient compilation process, while syntax and semantic analysis further check the code before it is translated.

The final phase of compilation produces optimized machine code while the symbol table continues to be managed efficiently. Developers who understand each phase can program more efficiently and work through compilation problems more smoothly.

Two-Pass Compilation and Machine-Dependent Code Generation

A two-pass compiler processes the program in two stages: the first pass analyzes the source and produces machine-independent intermediate code, and the second pass turns that intermediate code into code for the target machine.

This approach divides the compiler cleanly into modules and separates the machine-independent parts from the machine-dependent ones. Within this division, the Intermediate Code Generation (ICG) and Code Generation (CG) components belong to the synthesis side.

Two-pass compilation thus involves both analysis and synthesis; the symbol table plays an essential part in both by holding the variable and identifier information that code optimization relies on.

Machine-dependent code generation is critical for producing correct assembly code, because it ensures that the generated assembly is compatible with the target machine.

Without it, the resulting assembly code may be poor or may fail at execution. Because assembly code depends heavily on machine-specific operations, a well-designed generator is needed for efficient execution.

Role of RMD in Compiler Design and Syntax Analysis

Rightmost Derivation (RMD) plays an essential role in compiler design and syntax analysis.

Bottom-up parsing can be pictured as a production process with several steps and transformations. Starting from the input string, the parser repeatedly finds a handle and reduces it, a technique known as handle pruning.

This cycle of reduce actions continues until the desired final result, the grammar's start symbol, has been reached. Understanding RMD is fundamental in bottom-up parsing, where the derivation is constructed without prior knowledge of the complete structure.

By comparison, the top-down approach uses Leftmost Derivation (LMD). Bottom-up parsing traces a rightmost derivation in reverse, which may be conceptually less straightforward. Many students nevertheless find it easier to write out the RMD first and then reverse it than to work with direct top-down methods.
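
The link between reductions and a rightmost derivation can be made concrete with a tiny example. The grammar below (E → E + T | T, T → id) and the greedy `shift_reduce` function are assumptions for illustration; the function simply tries the longest right-hand side first, which happens to work for this grammar, whereas real bottom-up parsers use LR tables to decide when to shift and when to reduce.

```python
GRAMMAR = [                      # (left-hand side, right-hand side), longest first
    ("E", ["E", "+", "T"]),
    ("E", ["T"]),
    ("T", ["id"]),
]

def shift_reduce(tokens):
    """Return the sentential forms seen while reducing `tokens` to the start symbol E."""
    stack, rest = [], list(tokens)
    forms = [" ".join(rest)]
    while True:
        for lhs, rhs in GRAMMAR:
            if stack[-len(rhs):] == rhs:          # handle found on top of the stack
                stack[-len(rhs):] = [lhs]         # reduce it
                forms.append(" ".join(stack + rest))
                break
        else:
            if not rest:
                break
            stack.append(rest.pop(0))             # no handle: shift the next token
    if stack != ["E"]:
        raise SyntaxError("input is not derivable from E")
    return forms

forms = shift_reduce(["id", "+", "id"])
for form in forms:
    print(form)
# id + id
# T + id
# E + id
# E + T
# E
```

Read from bottom to top, the printed forms are E ⇒ E + T ⇒ E + id ⇒ T + id ⇒ id + id, which is the rightmost derivation of the input: at each step the rightmost nonterminal is the one expanded.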

Vanitha

Author